An Objective Look at Radiocarbon Dating

In a 2021 interview with the Albright Institute, Prof. Israel Finkelstein extolled the merits of radiocarbon dating in archaeology. “We need to turn to radiocarbon, to carbon-14 dating,” he said. Using carbon dating allows us to “disconnect the discussion from one’s understanding of the biblical verses because radiocarbon is not influenced by the Bible.”

Professor Finkelstein is well known as a leading advocate of the “low chronology” dating of the United Monarchy of Israel. According to this theory, the monumental Iron IIA structures traditionally dated to the reigns of David and Solomon in the early 10th century b.c.e. were actually constructed almost a century later, sometime during the ninth century b.c.e. Finkelstein has long claimed his low-chronology position can be proved by carbon dating.

But there’s a problem: Professor Finkelstein’s opponents on this issue, those who advocate high chronology (the traditional dating of the structures), also use radiocarbon dating to support their argument. In fact, radiocarbon dating has been widely used, especially over the last decade, as evidence supporting the traditional chronology of the United Monarchy.

The late Dr. Eilat Mazar, for example, used carbon dating in her excavations in the City of David to identify King David’s palace. (Dr. Mazar may have been the first to use the technology in a Jerusalem excavation.) Her colleague, Hebrew University professor Yosef Garfinkel, is also a proponent of radiocarbon dating and has utilized the technology to identify various impressive “Davidic”-era sites. According to Prof. William Dever, carbon dating—rather than proving Finkelstein’s theory—actually delivers a “deathblow” to the low-chronology theory.

What a conundrum! How can all sides of the debate use radiocarbon dating to prove entirely different positions on the dating of the United Monarchy?

One answer points to a crucial yet widely overlooked reality: Radiocarbon dating is not the incredibly accurate, entirely independent, purely scientific and objective means of dating it is often portrayed to be!

There’s no doubt that radiocarbon dating can be a fantastic tool in archaeology. It’s one we advocate as a means of corroborating dates and have employed in our own archaeological work with Dr. Mazar. And it’s a tool we will continue to use and advocate. But both the science and the practice of carbon dating are far from perfect. This means carbon dating is not the standalone, silver-bullet solution it is often portrayed to be. The truth is, radiocarbon dating is inherently based on numerous assumptions and some imperfect science and math. Beyond that, like all data, it is vulnerable to being misinterpreted, misconstrued and mishandled.

Let’s take an objective look at radiocarbon dating.

A Chemistry Lesson

First, what is radiocarbon dating? Radiocarbon dating, also known as carbon dating, carbon-14 dating, C-14 or 14C, was invented by American physical chemist Willard Libby in the 1940s. This form of dating is just one of a broad range of scientific dating methods known collectively as radiometric (or radioisotope) dating. Carbon dating is typically used to determine the age of “younger” material—that is, organic material up to 50,000 years old. Among the various forms of radiometric dating (e.g. uranium dating, samarium-neodymium dating), radiocarbon dating is widely considered the most reliable.

Put simply, carbon dating determines the age of material by measuring the level of carbon-14 remaining in it. Carbon dating can be used to date only organic matter, such as bones, seeds, grains, natural fabrics or charcoal. Inert materials, such as stones or clay objects, cannot be carbon-dated.

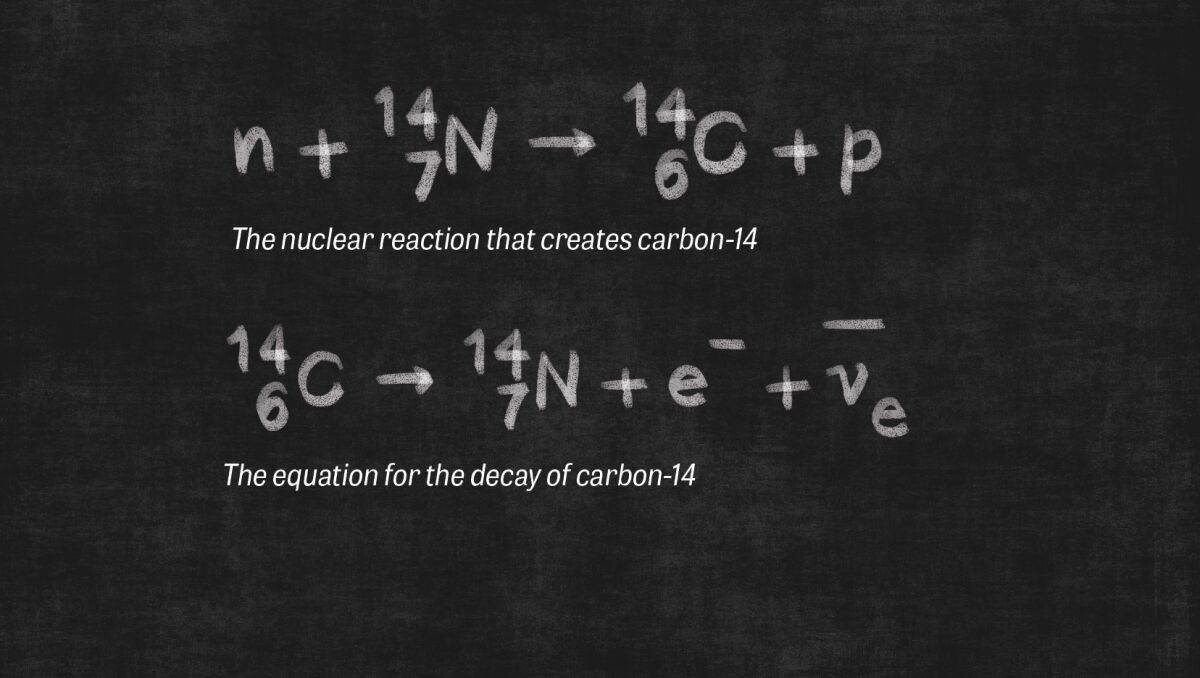

Organic material contains different types of carbon, including carbon-12 (C-12) and carbon-14 (C-14). In nature, the majority of carbon atoms have a nucleus containing six protons and six neutrons: the stable carbon-12. A tiny fraction, however, have two extra neutrons. These atoms form in the upper atmosphere when neutrons produced by cosmic rays strike nitrogen atoms, and they are radioactive. This isotope is called carbon-14.

The number of C-14 atoms on Earth is tiny compared to the number of C-12 atoms. About one out of every trillion carbon atoms is a C-14 atom. All living organisms, including plants, animals and humans, contain these C-14 atoms, which are absorbed into the living organism with carbon dioxide from the atmosphere. As long as an organism is living, the C-14 ratio in that living organism should equate to the C-14 ratio in the atmosphere. When the organism dies, however, carbon is no longer being absorbed. And while the stable C-12 in the organism remains the same, the C-14 isotopes begin to decay. The steady, constant decay of the radioactive C-14 presents scientists with the potential opportunity to measure time.

This radioactive-decay process is known as a “half-life.” The technical website Labmate Online offers a good definition of this term: “Half-life refers to the amount of time it takes for an object to lose exactly half of the amount of carbon (or other element) stored in it …. The half-life of carbon is 5,730 ± 40 years, which means that it will take this amount of time for it to reduce from 100g of carbon to 50g—exactly half its original amount.

“By testing the amount of carbon stored in an object, and comparing it to the original amount of carbon believed to have been stored at the time of death, scientists can estimate its age.”
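As a rough illustration of the half-life idea in the quotation above, the following Python sketch computes the fraction of C-14 remaining after a given time and then inverts the relation to recover the elapsed time. It assumes the 5,730-year half-life quoted above and a known starting amount; the function names are ours, chosen purely for illustration.

```python
import math

HALF_LIFE_YEARS = 5730.0  # half-life quoted in the text (the ± 40 years is ignored here)


def fraction_remaining(years: float) -> float:
    """Fraction of the original C-14 left after `years` of decay."""
    return 0.5 ** (years / HALF_LIFE_YEARS)


def years_elapsed(fraction: float) -> float:
    """Invert the decay law: time needed to decay to `fraction` of the original."""
    return -HALF_LIFE_YEARS * math.log2(fraction)


print(fraction_remaining(5730))  # one half-life: half of the C-14 remains
print(years_elapsed(0.25))       # a quarter remaining corresponds to two half-lives
```

Note that the second function is only as good as its input: it presupposes that the starting amount of C-14 is known, which is exactly the difficulty discussed next.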

Sounds straightforward, doesn’t it? But it isn’t. In order for scientists to calculate how long an organism has been dead, they need two crucial bits of information. First, they need to know how much C-14 is in the dead organism. This can be readily measured by using what is known as a mass spectrometer. Second, the scientist must know how much C-14 was in the organism when it was alive. This is where it gets difficult—really difficult.

Scientists thousands of years ago, of course, weren’t measuring and documenting the C-14 ratio of organisms when they died. The hard data isn’t available. So how do scientists determine this crucial measurement?

Making Assumptions

Lacking the true figures, scientists make a series of assumptions.

The science of radiocarbon dating is built on a theory called uniformitarianism, or doctrine of uniformity. This is the theory that throughout history, changes on Earth have happened in a generally slow, consistent, uniform manner. This theory postulates that the Earth’s processes “acted in the same manner and with essentially the same intensity in the past as they do in the present” (Encyclopedia Britannica). This theory stands in contrast to the theory of catastrophism (the biblical model), which suggests that Earth’s history has been shaped by cataclysmic changes—events that could, for example, radically alter atmospheric carbon ratios.

To answer the fundamental question of how much C-14 was in an ancient organism at the moment it died, scientists, relying on the theory of uniformitarianism, assume that the amount of C-14 in the atmosphere has remained generally constant throughout history.

This relies on the assumption that the constant level of atmospheric C-14 reached its “equilibrium” early on in Earth’s history. Labmate explains: “By measuring the rate of production and of decay … scientists were able to estimate that carbon in the atmosphere would … [reach] equilibrium in 30,000–50,000 years. Since the universe is estimated to be millions of years old, it was assumed that this equilibrium had already been reached.”
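The 30,000–50,000-year figure in the quotation can be roughly reproduced with a simple model. The sketch below assumes, purely for illustration, that C-14 is produced at a constant rate, that the atmosphere starts with none, and that C-14 decays with a 5,730-year half-life. Under those assumptions the level approaches equilibrium as 1 − e^(−λt). The function names are ours.

```python
import math

HALF_LIFE_YEARS = 5730.0
DECAY_CONSTANT = math.log(2) / HALF_LIFE_YEARS  # lambda, per year


def fraction_of_equilibrium(years: float) -> float:
    """Share of the equilibrium C-14 level reached after `years`,
    assuming constant production and a starting level of zero."""
    return 1.0 - math.exp(-DECAY_CONSTANT * years)


def years_to_reach(fraction: float) -> float:
    """Time needed to build up to `fraction` of the equilibrium level."""
    return -math.log(1.0 - fraction) / DECAY_CONSTANT


for target in (0.97, 0.99, 0.997):
    print(f"{target:.1%} of equilibrium after about {years_to_reach(target):,.0f} years")
```

In this simplified model the level passes about 97 percent of equilibrium after roughly 29,000 years and about 99.7 percent after roughly 48,000 years, broadly in line with the quoted range. The result depends entirely on the constant-production assumption.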

These assumptions, built into the science of radiocarbon dating from its inception, are fraught with problems. For example, scientists discovered in the 1960s that the C-14 growth rate was actually significantly higher (by almost one third) than the decay rate. “This indicated that equilibrium had not in fact been reached, throwing off scientists’ assumptions about carbon dating” (ibid).

That’s not all. Not only is there not an observable equilibrium of carbon-14 in the atmosphere, scientists have also discovered that the production and decay rates of carbon-14 have fluctuated over time. (This truth was actually identified by Willard Libby, but since his findings contradicted the perceived uniformitarian model, the discrepancies were dismissed as “experimental error.”)

How do you estimate historical C-14 levels if they are constantly fluctuating?

B.P.—the New B.C.

Logically, what is needed first is a clear starting point to compare past ratios against—a fixed, “modern” ratio of carbon-12 to carbon-14. This starting data point was determined in the late 1950s.

A “large quantity of contemporary oxalic acid dihydrate was prepared” and sampled as a reference value for atmospheric C-12 to C-14, chemistry expert Lloyd A. Currie explained in “The Remarkable Metrological History of Radiocarbon Dating II” (Journal of Research of the National Institute of Standards and Technology, April 1, 2004). This sample, which relates specifically to the year 1950, would serve as a “standard” modern ratio to compare historic C-12 to C-14 ratios against. “This value is defined as ‘modern carbon’ referenced to a.d. 1950. Radiocarbon measurements are compared to this modern carbon value … using the exponential decay relation and the ‘Libby half-life,’ 5,568 a. The ages are expressed in years before present (b.p.), where ‘present’ is defined as a.d. 1950” (ibid).
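The “exponential decay relation” Currie mentions can be sketched as follows. This is a minimal illustration, assuming the measured sample has already been expressed as a fraction of the “modern carbon” standard; the function and variable names are ours. The Libby half-life of 5,568 years corresponds to a mean life of about 8,033 years (5,568 divided by the natural logarithm of 2).

```python
import math

LIBBY_HALF_LIFE = 5568.0                          # years, as quoted by Currie
LIBBY_MEAN_LIFE = LIBBY_HALF_LIFE / math.log(2)   # about 8,033 years


def conventional_age_bp(fraction_modern: float) -> float:
    """Uncalibrated radiocarbon age in years b.p. from the sample's C-14
    level expressed as a fraction of the 1950 'modern carbon' standard."""
    if not 0.0 < fraction_modern <= 1.0:
        raise ValueError("fraction_modern must be in (0, 1] for this sketch")
    return -LIBBY_MEAN_LIFE * math.log(fraction_modern)


print(round(conventional_age_bp(0.5)))   # half the modern level: one Libby half-life
print(round(conventional_age_bp(0.70)))  # 70 percent of the modern level
```

The output is a raw, uncalibrated age. Everything in it hinges on the “modern” reference value and on the half-life chosen.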

For example, a radiocarbon date of 3000 b.p. is technically 3,000 years prior to c.e. 1950, which is 1051 b.c.e. (taking into account there is no year zero).
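The arithmetic of that conversion, including the missing year zero, can be written out in a few lines of Python. This is only a sketch of the calendar bookkeeping (the function name is ours); it does not perform any calibration.

```python
def bp_to_calendar(years_bp: int) -> str:
    """Convert a date in years b.p. (before a.d. 1950) to a calendar label.

    There is no year zero, so the year before 1 c.e. is 1 b.c.e.
    """
    year = 1950 - years_bp  # astronomical numbering, where 0 means 1 b.c.e.
    if year >= 1:
        return f"{year} c.e."
    return f"{1 - year} b.c.e."


print(bp_to_calendar(3000))  # 1051 b.c.e., matching the example above
```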

By establishing the carbon ratios in 1950, scientists created a benchmark against which they could measure historical levels. It’s a good idea, but not perfect. And once again, certain assumptions were required to settle on this all-important benchmark number, calling into question its integrity. For example, in this 1950 sample, C-14 “concentration was about 5 percent above what was believed to be the natural level, so the standard for radiocarbon dating was defined as 0.95 times the 14C concentration of this material,” Currie wrote (emphasis added throughout).

Scientists also discovered that even the original C-14 half-life calculation of 5,568 years is “off.” The now-determined value is 5,730 years (again, ± 40 years), or nearly two centuries more than the traditional figure. Even though it is known to be incorrect, scientists today still use the “Libby half-life” figure of 5,568 years. Why? So they can maintain consistency with early radiocarbon figures; the final figures are then calibrated to compensate for this miscalculation.
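The size of the half-life discrepancy is easy to quantify. As a sketch (the function name is ours), an age computed with the Libby half-life can be rescaled to the 5,730-year value by multiplying by the ratio of the two half-lives, about 1.03. Since laboratories keep the Libby figure and let calibration absorb the difference, this is illustrative arithmetic rather than a description of laboratory procedure.

```python
LIBBY_HALF_LIFE = 5568.0    # years, the conventional figure
REVISED_HALF_LIFE = 5730.0  # years, the later determined value


def rescale_to_revised_half_life(libby_age_bp: float) -> float:
    """Rescale an age computed with the Libby half-life to the 5,730-year value."""
    return libby_age_bp * REVISED_HALF_LIFE / LIBBY_HALF_LIFE


print(round(REVISED_HALF_LIFE / LIBBY_HALF_LIFE, 3))  # ratio of the two half-lives
print(round(rescale_to_revised_half_life(3000)))      # a 3000 b.p. Libby age, rescaled
```

On a raw age of 3000 b.p., the difference between the two half-lives amounts to a little under 90 years.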

All this math and chemistry can be hard to follow. The point is this: The scientific premise of radiocarbon dating is not built entirely on hard facts or absolutes. It is built, at least partially, on educated guesses and assumptions.

There are other variables that also need to be considered and factored in.

Other Variables

Perhaps the most famous factor impacting radiocarbon dating is the atmospheric nuclear weapons testing that has taken place over the last 80 years. Radioactive isotopes from these tests have “infected,” at least on some level, every living organism on the planet, causing a rise in carbon-14 levels (and resulting in a generally younger-than-expected face-value dating).

This is one benefit of using the 1950 data set as the standard—because it antedates much of this nuclear contamination (but not all). When it comes to man’s impact on C-14 levels, nuclear testing is only the tip of the iceberg. In the Industrial Era, for example, the burning of fossil fuels released enormous quantities of C-14-depleted carbon dioxide into the atmosphere.

And it’s not just modern technology that affects the atmospheric carbon balance. As noted, the ratio has never been constant, and neither has the decay rate. This is because all sorts of natural phenomena influence carbon levels, thereby undermining the assumptions used in radiocarbon dating.

Cosmic rays, as well as solar flares and sunspots, directly affect the amount of C-14 in the atmosphere. So does our solar system’s passage through the Milky Way’s magnetic clouds. Fluctuations in the Earth’s own magnetic field play a role in C-14 levels, including phenomena such as geomagnetic reversals and polarity excursions (either global or localized). Even simple changes in the seasons affect C-14 levels: The time of year at which an organism died may affect its dating by decades.

The list goes on. Volcanoes emit C-14-depleted CO2 into the atmosphere, contributing to an older-than-reality dating of affected organic material, particularly in volcanic regions (like Santorini). Glaciation is another variable; carbon stored in glaciers is depleted in C-14, giving the organic material an older-than-actual age.

Carbon-14 rates can even vary depending on which part of the planet the sample is taken from. C-14 levels are typically more depleted in the southern hemisphere, resulting in artificially older ages. Related to this is the “island effect.” Scientists postulate that the carbon-dating of material from islands, which are surrounded by masses of water, results in older-than-actual dates. Again, all of these variables must be calibrated into radiocarbon calculations and models.

The issues only compound when it comes to water and water-related objects. Chief among them is the “marine reservoir effect.” Carbon ingested and absorbed by marine organisms is typically much older than that consumed by terrestrial organisms. This is known to cause deviations in carbon dating of hundreds or potentially thousands of years. Water temperature and depth also play a role in carbon absorption. Then there’s the “hard water effect.” The carbon content in rivers and groundwater is impacted by the type of rocks the water flows over. As an example, mussels that are still alive have been carbon-dated to over 2,000 years old due to their exposure to water depleted of carbon-14 via contact with limestone and humus soil.
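To get a feel for the numbers, the sketch below relates the C-14 depletion of a carbon source to the apparent age it adds to an organism that is still alive. It assumes the conventional Libby mean life of about 8,033 years and, as a simplification, that all of the organism’s carbon comes from the depleted source. The function names are ours.

```python
import math

LIBBY_MEAN_LIFE = 5568.0 / math.log(2)  # about 8,033 years


def apparent_age_offset(relative_c14_level: float) -> float:
    """Extra apparent age (years) for a living organism whose carbon source
    holds `relative_c14_level` times the atmospheric C-14 level."""
    return -LIBBY_MEAN_LIFE * math.log(relative_c14_level)


def relative_level_for_offset(offset_years: float) -> float:
    """C-14 level, relative to the atmosphere, that yields a given offset."""
    return math.exp(-offset_years / LIBBY_MEAN_LIFE)


print(round(relative_level_for_offset(2000), 2))  # level implied by a 2,000-year offset
print(round(apparent_age_offset(0.95)))           # offset from a 5 percent depletion
```

Under these assumptions, an apparent age of 2,000 years in a living organism implies a carbon source holding roughly 78 percent of the atmospheric C-14 level, and even a 5 percent depletion adds about four centuries.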

This might seem peripheral to the field of terrestrial archaeology. Yet it does present a real-world issue, particularly as it relates to terrestrial organisms, including humans, that consume marine animals. One famous example is that of a Viking burial in Derbyshire, England. Coins at the site, among other contextual material, clearly dated it to the ninth century c.e. Yet carbon-dating of human bones suggested they were centuries older. The riddle was finally solved in 2018, when scientists realized that the discrepancy was caused by the seafood-rich, C-14-depleted diet of the Vikings, which made the bones appear older.

Calibration to the Rescue

Due to all these variables, raw radiocarbon data must be calibrated by scientists through outside means—other methods of establishing carbon levels through history (such as dendrochronology). This data is then used to construct a calibration curve, the model through which radiochronologists produce their dates. But even this can introduce its own bias and error.

One method of creating a calibration curve is through the carbon analysis of artifacts of known ages. Based on written testimonies and chronologies, carbon samples from sites of known ages can be tested and compared, and the results can then be calibrated and used for dating artifacts of unknown ages.

Following his invention of the science, Willard Libby originally attempted to use this method to check the accuracy of his raw radiocarbon data. In his 1960 Nobel Prize acceptance lecture, Libby highlighted the immediate issue his team came up against: the ambiguity of historical ages. “You read statements in books that such and such a society or archaeological site is 20,000 years old,” he said. “We learned rather abruptly that these numbers, these ancient ages, are not known accurately; in fact, it is about the time of the First Dynasty in Egypt [circa 3000 b.c.e.] that the first historical date of any real certainty has been established.” (Still, there remains much debate even about the dating of this period.)

Certain historical events are typically cited as being of known age. For example, relating to ancient Israel, we have the 732 b.c.e. invasion of Tiglath-Pileser, the 701 b.c.e. invasion of Sennacherib, and the 586 b.c.e. destruction of Jerusalem. The easily datable, often burned organic remains from such destruction levels can be used as an adjustment and calibration to radiocarbon dating (as can organic remains from sealed, historically datable tombs).

Yet even here, we have problems. Even among generally agreed-upon benchmarks, there is naturally doubt and debate. For example, a significant school of thought holds, based on the biblical text and other evidence, that Sennacherib’s invasion of Judah took place around 710 b.c.e. There is also debate about the exact year that Jerusalem fell. While the dates may not differ dramatically, they illustrate the problems with benchmarks. As such, archaeologists view historically calibrated carbon dates with a degree of suspicion.

There is another, more common way of calibrating radiocarbon dates—a method also used in an attempt to extend the dating back into the realm of prehistory. This is dendrochronology, the science of dating according to tree rings (see sidebar “Dendrochronology” below).

To this point, we have noted the complications with the methodology of radiocarbon dating. What about the sample material being tested?

Problems in Datable Material

Again, carbon dating can only be used to date organic, living (or once-living) things. A typical example would be the discovery of a bone in a certain context. Let’s assume that our dating method is perfect. Even if the science and methodology are flawless, dating the bone with any degree of certainty is still a major challenge.

Radiocarbon-dating the bone only tells us when the creature died. It does not reveal its age at death, or when exactly it was buried or, perhaps, reburied.

This is a real-world archaeological problem. Scavenging animals have a habit of picking up, transporting, burying and reburying bones. (Of course, even humans have a habit of exhumation and reburial.) Because of these factors with bones, scientists prefer, whenever possible, to use seeds or other small organic deposits for dating.

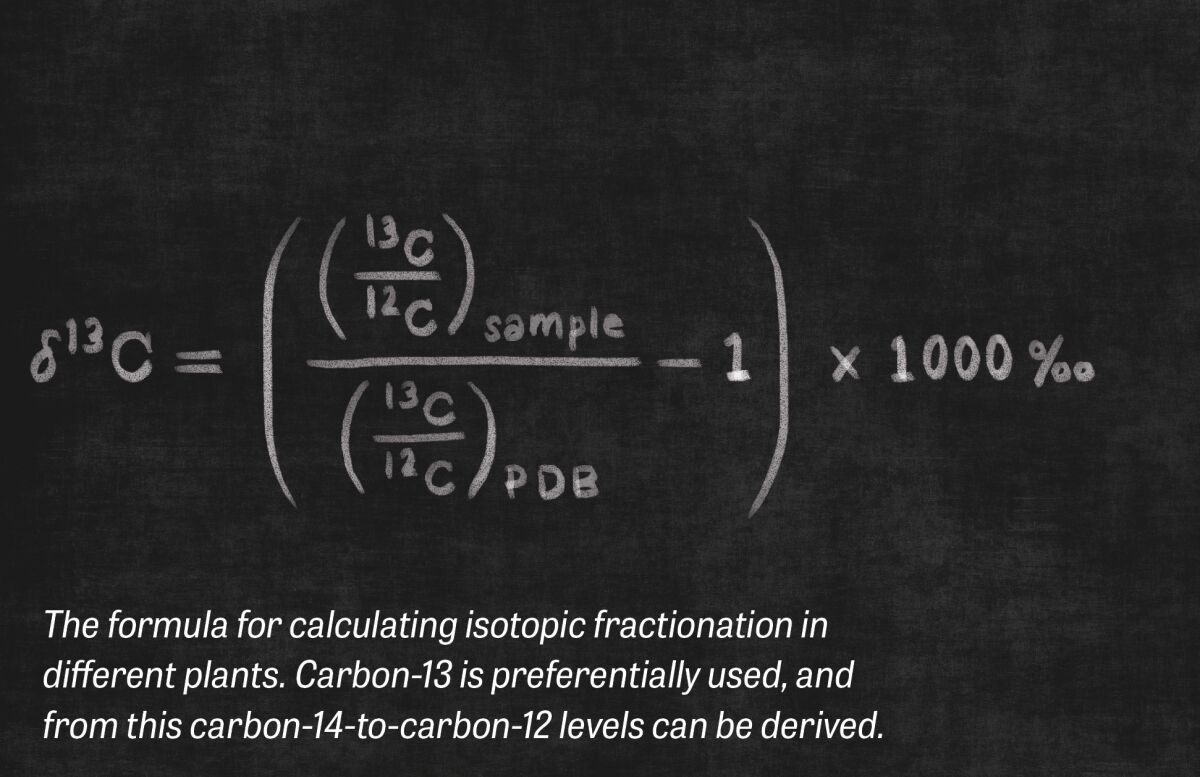

But carbon-dating plant matter comes with its own headaches. This is because flora, though they feed off carbon dioxide, actually discriminate against C-14, absorbing less than animals (and thus giving an older-than-actual date). Not only that, different plants selectively absorb different amounts of C-14. Thus, the ratios of carbon isotopes will not necessarily reflect existing atmospheric ratios while that plant is alive. This is known as “isotopic fractionation.” And this not only needs to be properly accounted for in terrestrial plants, it must also be accounted for in consumable marine plants.

What about wood remains? The problem with wood is that it can be used and reused over centuries. This means that wood samples, including charcoal remains—once considered radiocarbon-dating gold—are also not necessarily ideal. Another issue with wood is that it can be difficult to know which part of the tree the sample came from. If it came from deep inside the trunk, for example, it would be old. If it came from the outside, depending on the type of wood, it could potentially be hundreds of years younger. Scientists refer to this as the “old wood effect.”

There is also the issue of human error during the collection, storage and testing of organic samples. It is incredibly easy to contaminate a sample during excavation, packaging or handling. Even the slightest contact with paper or cotton wool, for example, can contaminate a sample with modern carbon. Depending on the age of the artifact, even a single-digit percentage of contamination can throw off the result by decades or centuries.
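The effect of modern-carbon contamination can be estimated with a simple mixing model. The sketch below is illustrative only: it assumes the contaminant has exactly the “modern” C-14 level (1.0), that the rest of the sample has its true age, and it uses the conventional Libby mean life of about 8,033 years. The function name is ours.

```python
import math

LIBBY_MEAN_LIFE = 5568.0 / math.log(2)  # about 8,033 years


def apparent_age_with_modern_contamination(true_age_bp: float,
                                           contamination: float) -> float:
    """Apparent age when a fraction `contamination` of the sample's carbon
    is modern (C-14 level taken as 1.0) and the rest has the true age."""
    true_level = math.exp(-true_age_bp / LIBBY_MEAN_LIFE)
    mixed_level = (1.0 - contamination) * true_level + contamination * 1.0
    return -LIBBY_MEAN_LIFE * math.log(mixed_level)


for true_age in (3000, 10000, 30000):
    apparent = apparent_age_with_modern_contamination(true_age, 0.01)
    print(f"true {true_age} b.p. -> apparent {apparent:,.0f} b.p. "
          f"(shift of {true_age - apparent:,.0f} years)")
```

In this model, just 1 percent of modern carbon makes a 3,000-year-old sample appear a few decades too young and a 10,000-year-old sample roughly two centuries too young. The older the sample, the larger the distortion.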

It should be noted, too, that a single sample can be tested in different laboratories and produce different results, based on different standards and calibration. As such, it is standard practice to send samples to several different labs as a check on the reliability of the results.

Finally, human agenda can also pose an issue. It is not rare for the scientist doing the carbon dating to ask the archaeologist who submitted the artifact how old he or she expects the sample to be. If carbon dating is so reliable, why would this be necessary? Of course, carbon dating is not reliable by itself, and it is common for testing to produce anomalous dates. So, in an effort to prevent this, scientists will often prefer to test with at least a ballpark date in mind.

Another point worth noting is that, from time to time, rumors circulate within the scientific community about radiocarbon-dating specialists adjusting their conclusions to suit the agenda or argument of the archaeologist. Archaeologists will often send similar samples to different labs to mitigate this possibility.

Where Does That Leave Us?

As we have seen, radiocarbon dating is far from being a clear, fixed, unbiased, independent and reliable form of dating. Undoubtedly, it does represent a remarkable development in modern science and math. And thanks to modern calibration efforts, scientists have been able to iron out numerous “wrinkles” in the dating of various periods in history.

A key issue highlighted by these manifold problems, and by the ever-increasing number of “effects” being discovered that require additional calibration, is that we don’t know what we don’t know. In a field built upon multiple assumptions, with constantly doctored and changing data, how do we know that everything has been accurately accounted for? (See the sidebar for a recent example of how this relates to archaeology of the United Monarchy.)

In 2001, Prof. Amihai Mazar presented the results of what then constituted one of the largest batches of carbon-14-dated material from the Iron Age Levant. The results came from his excavations at Tel Beth Shean and Tel Rehov. Was it worth it? He presented his conclusions, in relation to the question of the United Monarchy and the claims then being made by Professor Finkelstein and his associates, in Radiocarbon (Vol. 43).

“The chronological debate concerning the 10th–9th centuries b.c.e. in Israel is over a time range of between 50 and 100 years. … [C]alibrated 14C dates sometimes [give] a time range that is too wide or ambiguous for the problem to be solved,” Professor Mazar wrote, based on certain of the ambiguous (or entirely erratic) carbon dates received. “Changes in recent versions of calibration curves imply that calibrated date ranges may yet change for samples of interest to chronological questions involving a time span of only 50–80 years.”

“Debates over the dates of archaeological strata are unavoidable,” Mazar noted. “The current debate over the 10th–9th centuries b.c.e. is an excellent case study. Yet it seems that there is a long way to go before the final word will be said in this debate.” It has been a while since Mazar presented his paper—yet even with advancement in this field, these conclusions still hold, to a large degree (again, note the sidebar, “A Study in Point”).

Traditionally, for the past century, comparative pottery analysis has been used to give dates to archaeological sites. If anything, carbon dating has proved that many of the traditional methods of dating are, in fact, the most accurate, and they come with additional benefits. Take pottery, for example. Pottery is plentiful in an excavation, it does not decompose, and with an archaeologist skilled in pottery reading, it can be dated with generally the same level of accuracy as radiocarbon. In some cases, it can be dated with even greater accuracy. What’s more, it can be done at no cost, and without waiting potentially weeks for a turnaround result (receiving dates promptly is particularly important in the course of an excavation). Certainly, radiocarbon samples, properly selected and dated, can serve as a good check, in tandem with pottery dating.

Will science produce a more accurate form of dating? We’ll see. Even now there is a new potential method in its earliest phases of research: archaeomagnetism. Yet sometimes, the simplest ways are the most effective.

Prof. Gabriel Barkay summed up radiocarbon dating rather flamboyantly: “Carbon-14 is like a prostitute. Given the margin of error, radiocarbon allows everyone to argue the position they already hold.” Prof. Bruce Brew put it this way: “If a C-14 date supports our theories, we put it in the main text. If it does not entirely contradict them, we put it in a footnote. And if it is completely out of date, we just drop it.”

These are hardly confidence-inspiring endorsements of the radiocarbon-dating method.

Sidebar: A Study in Point

Bible-believers are occasionally criticized for undermining trust in carbon dating. Critics claim that biblical archaeologists dislike radiocarbon dating because it undermines the traditional dating of biblical events (as in the low-chronology debate regarding the United Monarchy).

In fact, carbon dating performed over the last 10 years or so has actually served to corroborate the traditional dating of many events. To learn more about this, you can study the work of Hebrew University professor Yosef Garfinkel and esteemed archaeologist and historian William Dever, among others.

But it’s not rare for Bible critics to attempt to use carbon dating to cast doubts on dates consistent with the Bible and recalibrate the dates to be younger. In 2018, for example, Cornell University released a provocative paper titled “Fluctuating Radiocarbon Offsets Observed in the Southern Levant and Implications for Archaeological Chronological Debates.”

Using data collected from tree rings in the Southern Levant from the past 400 years, the Cornell study argues that the standard calibration curve for carbon-14 is off by about 20 years, and that carbon samples taken in the Holy Land should be calibrated by a separate system. From their data set, they deduce that the dates associated with the carbon samples in this region are, on average, about 20 years younger than previously estimated.

Twenty years doesn’t seem to be a lot; it still puts various “Davidic” samples (such as at Khirbet Qeiyafa) inside the dates for the United Monarchy (though beyond David’s lifetime). Still, the contention that all carbon samples in the area have been (and will be) dated to be 20 years too old further muddies the waters when it comes to using carbon samples to accurately date discoveries.

Reading through the Cornell paper, the bias of the researchers is obvious. Their conclusions are based upon an extremely limited data set, yet they hastily extrapolate the findings to apply to biblical times—and not just any time, but squarely the time period relating to the United Monarchy.

For example, the study itself was conducted on tree rings that go back only 400 years. In that time period, they do see variations that indicate the traditional dates were off by an average of 19 years, but in many cases they were off by no more than five years. Furthermore, they deduce that possibly warmer weather tended to make the samples appear older than colder weather did. Based on that, they conclude that since it may have been warmer in the Southern Levant from 1200 to 600 b.c.e., we should assume that the dates for the biblical period are likely younger than previously thought.

Consider the following sentence (taken from the study), and notice the remarkable number of qualifying words employed by the authors: “Where such calibration time series are not yet available (namely, before a.d. 1610 for the Southern Levant case at present), our data set better indicates the circumstances under which a likely potential range of error may apply for earlier periods—assuming that similar conditions and processes apply in earlier periods and accepting some possible variations—rather than offering any specific average correction factor” (emphasis added throughout).

To their credit, these researchers admit their methodology and analysis are far from perfect.

In this next sentence, the researchers note the ambiguity as to whether or not it was warmer during the biblical period, as they suggest: “Available paleoclimatic data for the Southern Levant for the earlier Iron Age are inconclusive, but, after indications of cooler and arid conditions in the period around the close of the Late Bronze Age through initial Iron Age, there are some (though not always consistent) suggestions of wetter and/or warming conditions in the Eastern Mediterranean region.”

By their own admission, there is a lot of guesswork and estimation. In spite of this, these researchers frame the whole report, including the title and abstract, around how the data might “potentially undermine” the traditional position in the chronological debate regarding David and Solomon. The press then takes it one step further and writes headlines like: “Cornell University professor shows how archaeologists’ data could be skewed by decades—potentially disproving the narrative of David and Solomon’s United Monarchy” (Times of Israel, June 7, 2018).

The reality is, the science and argumentation used to prove the traditional dates incorrect is very often more flawed than the science and argumentation used to determine the traditional dates!

Sidebar: Dendrochronology

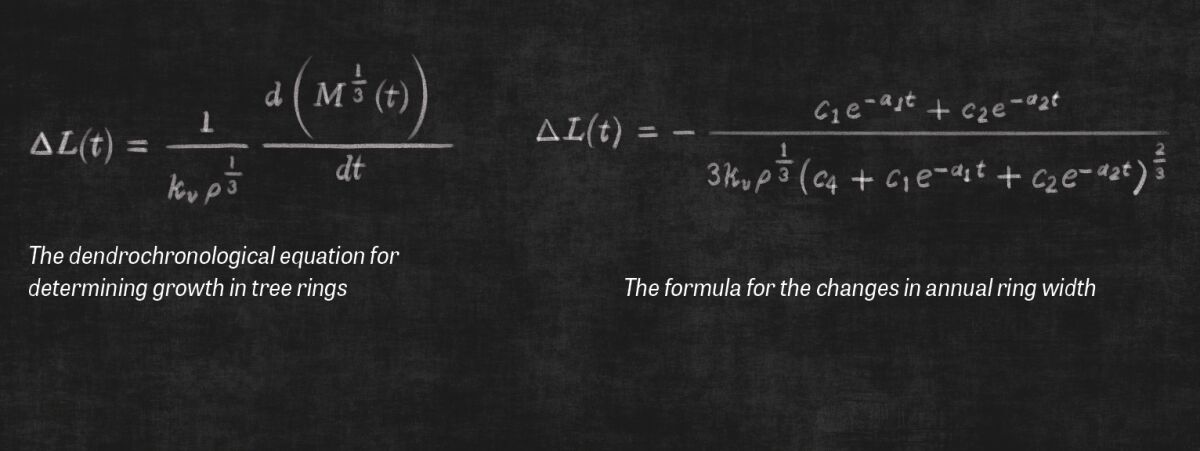

By either cutting a tree in half or boring out a core sample, researchers can count its rings, with each ring representing a year (or rather, a full season-cycle of growth), to determine the age of the tree. This is known as dendrochronology. Trees of different ages can then be sampled for carbon as a check against, and a calibration of, radiocarbon dates.

The oldest known tree is a bristlecone pine in California’s White Mountains, named Methuselah (after the oldest man in the Bible). This tree has about 4,850 tree rings—thus, it is conceivably 4,850 years old. Dendrochronologists have attempted to extend the age of trees far beyond the germination of the Methuselah tree. For example, they have lined up the tree rings of living bristlecone pines with tree rings of dead bristlecone pines to construct a sort of “tree ring chronology” going back around 12,500 years. Dendrochronologists visually compare the appearance of growth rings to one another, trying to match living and dead trees. Through this comparative analysis of tree rings, dendrochronologists have been able to create a theoretically reliable “ring history.”

Still, cross-matching tree rings is incredibly complicated. Every tree grows a little differently, so visually cross-matching tree rings is actually quite subjective. Furthermore, tree-ring patterns are not unique, and even trees growing right next to one another do not exhibit identical growth patterns.

And contrary to popular belief, tree rings do not simply work on a year-for-year principle. Stresses on the tree, such as droughts, can result in several rings forming in a single year or, alternatively, in rings going “missing” entirely. Further, mistakes are easily made in counting: A tree ring may be barely visible and easily missed, depending on the side of the tree that the bored sample is retrieved from.

If dendrochronologists cannot determine how the trees match up, what is the solution? They radiocarbon-date the growth rings to determine their approximate age. But what are radiocarbon-dating results verified against? Growth rings! This is, of course, circular reasoning.